The Deepwater Frontier Tech Index aims to provide investors with exposure to frontier tech themes that will transform our lives over the next 3-5 years. Go deeper.

This is a series of monthly posts providing an update on performance and sharing what’s on our mind in frontier technology.

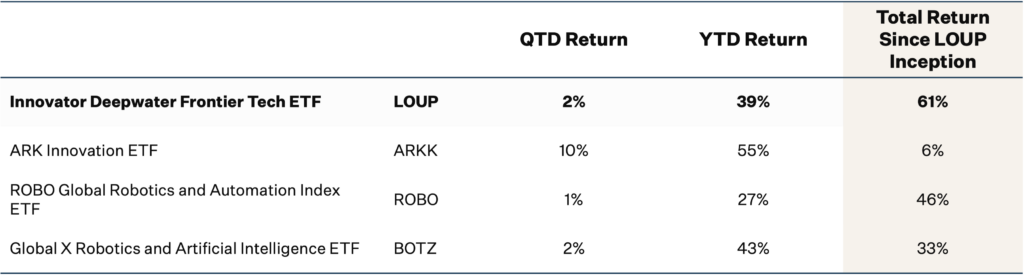

Performance Update:

What is On Our Mind?

Understanding AI: LLM Basics for Investors

Doug is publishing an AI series. Below is an excerpt from his piece about what investors need to know about how large language models (LLMs) work to make informed AI investments.

How ChatGPT Works

To make intelligent investments, an investor should have an understanding of how a company’s product works. Even if it’s a technical product like AI. With scorching investor excitement around AI, we should all make sure we understand the basics of what we’re investing in.

Here are the basics on large-language models (LLMs) like ChatGPT that are driving the AI boom.

What is an LLM?

A large-language model is a neural network that is trained to infer what words should come next in a sequence based on some prompt.

As we’ve detailed before, LLMs don’t “understand” the meaning of text like a human does. Instead, LLMs understand a text through statistical interpretation by learning from vast amounts of data. When an LLM answers a user’s question, it’s making an intelligent guess about what words and sequences of words make sense to come next given the question and the existing sequence of the answer so far.

There are several AI-specific terms in our LLM definition: neural network, training, inference, and prompt. I’ll define each in a simplistic way.

Neural Network

A neural network is a computer algorithm trained to recognize patterns. A neural network consists of many interconnected nodes — like neurons in the human brain — of varying weights and biases that determine the output of the model. The weights and biases in a neural network are known as parameters.

LLMs are often sized by the number of parameters in the model. More parameters suggest a more complex model. OpenAI’s GPT-4 reportedly has one trillion parameters vs 170 billion parameters in GPT-3. Google’s PaLM2 is trained on 340 billion parameters.

One of the reasons language-based AI seems further advanced than vision is the size of the models. The largest vision transformer model is Google’s recent ViT-22B with 22 billion parameters, one-fiftieth the number of parameters of GPT-4. Tesla’s neural network for autonomy had one billion parameters as of late 2022. Vision is a different function than language, and it may not require as many parameters to break through like GPT, but it seems logical to believe more parameters in vision would lead to better robots.

How Can I Invest in the Index?

Deepwater partners with Innovator ETFs to offer the NYSE-listed Innovator Deepwater Frontier Tech ETF. The ticker is LOUP.

To learn more about how to invest, visit the Innovator website.