This is the third in a series of notes based on our deep dive into computer perception for autonomous vehicles. Autonomy is a question of when, not if. In this series, we’ll outline our thoughts on the key components that will enable fully autonomous driving. See our previous notes (Computer Perception Outlook 2030, What You Need To Know About LiDAR).

If a human can drive a car based on vision alone, why can’t a computer?

This is the core philosophy that companies such as Tesla hold and practice in their self-driving vehicle initiatives. While we believe Tesla can develop autonomous cars that “resemble human driving” using primarily cameras, the goal is to create a system that far exceeds human capability. For that reason, we believe more data is better, and cars will need additional computer perception technologies such as RADAR and LiDAR to achieve a level of driving far superior to that of humans. However, since cameras are the only sensor technology that can capture texture, color and contrast information, they will play a key role in reaching Level 4 and 5 autonomy and in turn represent a large market opportunity.

Mono vs Stereo Cameras. Today, OEMs are testing both mono and stereo cameras. Due to their low price point and lower computational requirements, mono-cameras are currently the primary computer vision solution for advanced driver assistance systems (ADAS). Mono-cameras can do many things reasonably well, such as identifying lanes, pedestrians, traffic signs, and other vehicles in the path of the car, all with good accuracy. A monocular system, however, is less reliable at reconstructing a 3D view of the world. Stereo cameras can perceive the world in 3D and provide an element of depth perception thanks to their dual lenses, but their use in autonomous vehicles could face challenges because finding correspondences between the two images is computationally difficult. This is where LiDAR and RADAR have an edge over cameras, and they will be used for depth perception applications and for creating 3D models of the car’s surroundings.
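To make the stereo depth point concrete, here is a minimal sketch of the standard pinhole relation for a rectified stereo pair, depth = focal length × baseline / disparity. It assumes the hard part, matching a pixel in the left image to its counterpart in the right image, has already been done. The function name and the example numbers (focal length, baseline, disparity) are hypothetical and chosen only for illustration; they do not describe any particular camera.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a point seen by a rectified stereo pair.

    focal_px:     focal length expressed in pixels (assumed equal for both cameras)
    baseline_m:   distance between the two camera centers, in meters
    disparity_px: horizontal pixel shift of the same point between the two images
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity,
        # and a negative value indicates a bad correspondence.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical setup: 1000 px focal length, 0.5 m baseline.
    for disparity in (50.0, 10.0, 5.0):
        depth = depth_from_disparity(1000.0, 0.5, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:6.1f} m")
```

Because depth is inversely proportional to disparity, a matching error of a single pixel matters far more for distant objects than for nearby ones, which is one reason the correspondence step is so demanding.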

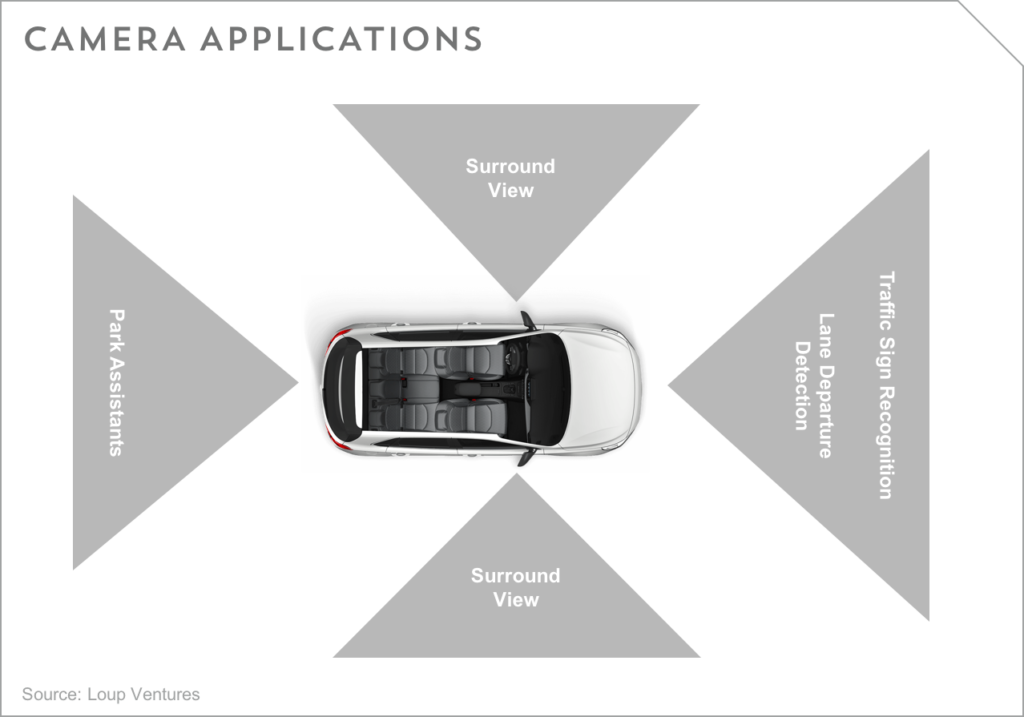

Camera Applications. We anticipate Level 2/3 and Level 4/5 autonomous passenger cars will be equipped with 6 – 8 and 10 – 12 cameras, respectively; most will be mono-cameras. These cameras will play a prominent role in providing nearly 360-degree perception and supporting applications such as lane departure detection, traffic signal recognition, and park assistance.

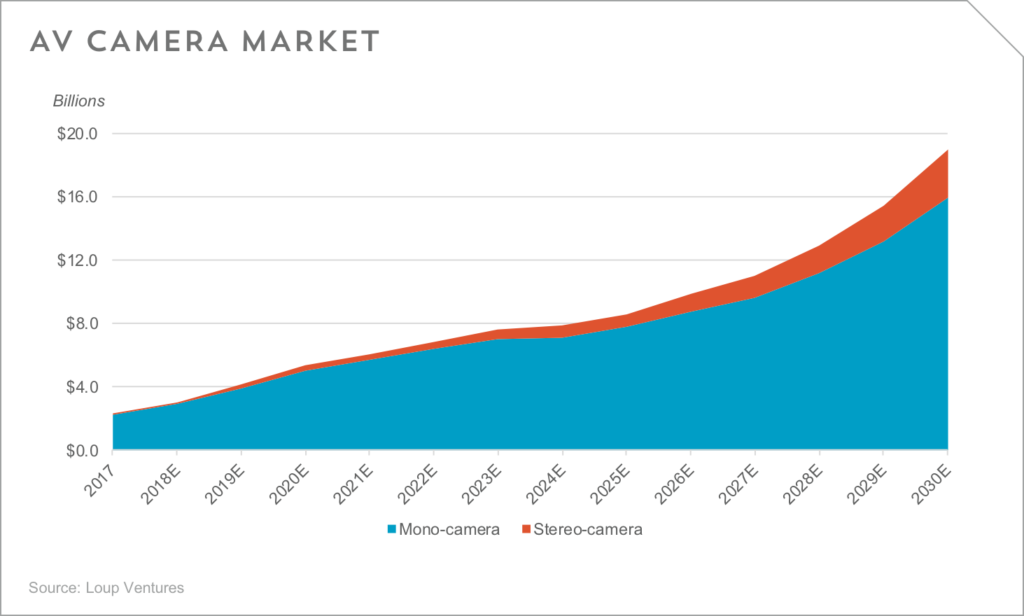

$19B Market Opportunity. Given that cameras are the primary computer perception solution for advanced driver assistance systems (ADAS), the camera market is currently the largest computer perception segment and represented a $2.3B opportunity in 2017. While growing adoption of ADAS-enabled cars will continue to act as a near-term catalyst, adoption of fully autonomous vehicles (Level 4/5) equipped with 10+ units per car will be the tailwind taking this market to $19B by 2030 (18% CAGR 2017 – 2030). As displayed in the chart below, the bulk of sales will be in the form of mono-camera systems. Note that our forecast is driven by our 2040 Auto Outlook and only includes passenger vehicles. Fully autonomous heavy-duty trucks will also leverage similar computer vision technology, and when factoring in these sales, the total camera market could be 1.5x – 2x larger.
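As a quick check on the arithmetic behind these figures, the sketch below recomputes the compound annual growth rate from the $2.3B (2017) and $19B (2030) endpoints and applies the 1.5x and 2x multipliers to the 2030 figure. The function and variable names are ours and purely illustrative; the inputs are the numbers quoted in this note.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the note, in billions of US dollars.
MARKET_2017_B = 2.3
MARKET_2030_B = 19.0
YEARS = 2030 - 2017  # 13 compounding periods

growth = cagr(MARKET_2017_B, MARKET_2030_B, YEARS)

# Range implied by "1.5x - 2x larger" once heavy-duty trucks are included.
with_trucks_low_b = MARKET_2030_B * 1.5
with_trucks_high_b = MARKET_2030_B * 2.0

if __name__ == "__main__":
    print(f"Implied CAGR 2017-2030: {growth:.1%}")
    print(f"2030 market including trucks: ${with_trucks_low_b}B to ${with_trucks_high_b}B")
```

The implied rate comes out at roughly 17.6%, which rounds to the 18% stated above, and the truck-inclusive range works out to $28.5B to $38B.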

Disclaimer: We actively write about the themes in which we invest: virtual reality, augmented reality, artificial intelligence, and robotics. From time to time, we will write about companies that are in our portfolio. Content on this site including opinions on specific themes in technology, market estimates, and estimates and commentary regarding publicly traded or private companies is not intended for use in making investment decisions. We hold no obligation to update any of our projections. We express no warranties about any estimates or opinions we make.