The run in AI-related stocks will prove overdone in the near term, but AI will prove even bigger than people think in the long term. That's a high bar given the hype.

Contradictory beliefs can signal contrarian investment opportunities. AI is the next Internet, but markets often front-run the impact of new technologies before that impact translates into financial benefit.

Stock prices are supposed to represent all the future cash flows an asset will generate, discounted back to the present, but I've never found the market to function that way. In the short to medium term, stock prices are driven more by flows, liquidity, and the next 6–18 months of fundamental performance than by the rest of time. That's what creates opportunities to invest in persistent growth companies: because of near-term headwinds in that 6–18-month window, markets lose faith in a company's ability to grow faster and for longer than seems reasonable.
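To make the arithmetic concrete, here is a small Python sketch that discounts a stream of growing cash flows and measures what share of the total present value comes from the first year. The inputs (a cash flow of 100 growing 15% a year, a 10% discount rate, a 30-year horizon) are purely hypothetical, and the constant-growth, finite-horizon setup is a simplification for illustration, not a valuation method for any real company.

```python
def discounted_cash_flows(first_cash_flow: float, growth: float,
                          discount_rate: float, years: int) -> list[float]:
    """Present value of each year's cash flow (hypothetical constant-growth model).

    Year t's cash flow is first_cash_flow * (1 + growth) ** (t - 1),
    discounted back to today by (1 + discount_rate) ** t.
    """
    return [
        first_cash_flow * (1 + growth) ** (t - 1) / (1 + discount_rate) ** t
        for t in range(1, years + 1)
    ]


def near_term_share(first_cash_flow: float, growth: float,
                    discount_rate: float, years: int, near_years: int) -> float:
    """Fraction of total present value contributed by the first `near_years` years."""
    values = discounted_cash_flows(first_cash_flow, growth, discount_rate, years)
    return sum(values[:near_years]) / sum(values)


if __name__ == "__main__":
    # Hypothetical inputs chosen only for illustration.
    share = near_term_share(100.0, growth=0.15, discount_rate=0.10,
                            years=30, near_years=1)
    print(f"First year's share of present value: {share:.1%}")  # prints 1.6%
```

Under these assumed inputs, the first year contributes less than 2% of the modeled value, even though the near-term window is what tends to move the stock.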

As the market realizes that meaningful AI revenues are further off for many companies, AI premiums should fade and these stocks should trade down.

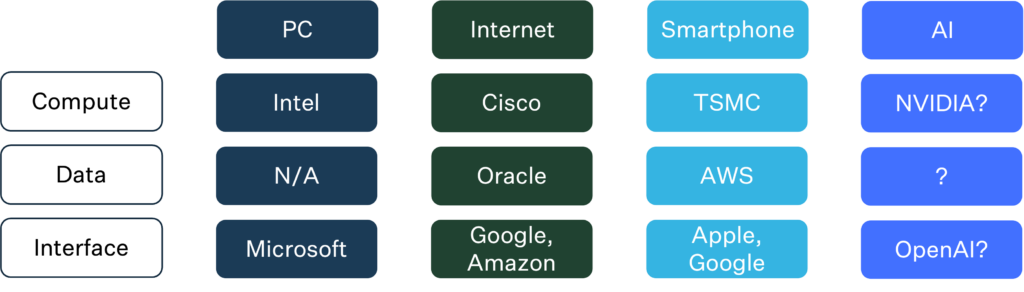

This is not a bearish view, only a rational one. AI will create great long-term investment opportunities, just like the computer-driven paradigm shifts before it. To assess the real long-term opportunities in AI, rather than the recent hype, I use a mental model for investing in paradigm shifts inspired by the PC, the Internet, and the smartphone.

Compute, Data, Interface

Every computer-driven paradigm shift depends on the convergence of three elements: compute, data, and interface.

Compute is where machines perform the calculations that create value for customers. Data is the information the system has available to calculate on. Interface is how end users interact with the system.

Identifying these elements of a technology paradigm shift is not some profound insight, but it gives investors a useful framework for considering, from first principles, what enables companies to win big from the shift. The enabling technologies of our model paradigm shifts differ, but the demands each element places on a breakthrough do not. Companies that successfully addressed the specific needs of their category in prior paradigm shifts have become some of the biggest companies in the world and the best persistent growth investments in history.

I think the mental model that describes the winners of past paradigm shifts will prove useful for AI too.

Compute

Compute is the base hardware layer for machine interaction. The more cheaply we can perform the desired action, the more that action can scale. Therefore, evolutions in compute are almost always about cost efficiency.

Intel won the chip wars in the 1980s and 1990s because it achieved vastly greater volumes for x86 chips than rivals did for RISC-based chips. Greater volumes meant lower prices for chips and, ultimately, for the PCs they powered.

Cisco won "compute" for the Internet through its early recognition of the importance of switching, which lets more data travel through a connection. More data transfer meant more efficient Internet traffic.

TSMC won the smartphone era as an independent chipmaker for Apple and other smartphone makers, creating efficient scale across many chip designers and multiple operating systems. ARM deserves an honorable mention as a compute winner for the smartphone, as its energy-efficient architecture proved perfect for power-constrained devices.

So far, Nvidia is running away as the AI compute beneficiary because its GPUs are far more efficient at AI tasks than CPUs. The company likely has 80-90%+ of AI workload market share, and that won't change unless another company finds a way to deliver 100x greater efficiency than Nvidia. The only way I think this might happen is via chips purpose-built for AI processing.

Many companies have emerged to develop chips specialized for AI processing, including an investment of ours, Rain.

We'll see what happens. Absent some breakthrough in efficiency, Nvidia likely maintains the winning role in AI compute.

Data

Data started as the least important element of technological paradigm shifts.

In the PC era, data was largely a commoditized local concern. While companies like Seagate and Western Digital built businesses around PCs' storage needs, from floppy disks to hard drives, neither established itself as a persistent growth company because the nature of the industry didn't support it.

The Internet granted more power to data. A useful network relies entirely on the transfer of data. Larry Ellison and Oracle knew this, and it was Oracle's focus on the Internet that cemented its position as a dominant player in databases, which it remains to this day.

The smartphone era brought an even greater demand for data access and usability via end applications. It's no accident that the cloud era coincided with the smartphone era. Amazon's AWS cloud offering was the winner in data for the smartphone/cloud era. While AWS could also be considered a compute offering, the platform started with data, and the value of the cloud lies in enabling compute on data stored there.

The evolution of data’s importance in technological paradigm shifts tells us that the data element is won by improving accessibility and usability. Oracle and AWS made data more broadly accessible to and usable by developers creating applications for end users.

To that end, I think of the data category in the AI paradigm as built on three elements: cloud for accessibility, and models and data management for usability.

Cloud

Cloud for AI is dominated by AWS and Azure. As with Nvidia in compute, it seems likely that the hyperscalers will maintain dominance here unless some new cloud solution emerges that improves data accessibility by 10x or more. The hyperscalers probably benefit from a persistent growth tailwind going forward and may be the best way to play the cloud AI opportunity.

Models

Models make data usable for applications. OpenAI's GPT seems to be the favored model for now, but there are many other contenders from Google, Facebook, and several startups. There are also task-specific models, such as Tesla's Autopilot AI and Waymo's, for self-driving.

The interesting debate on models is whether they are a commodity or the most valuable piece of the entire stack. The commodity argument is that developers already have dozens of usable AI models to choose from. The most-valuable argument is that one model evolves toward general intelligence, making all other models irrelevant. Both ideas may be true: models may be a commodity in the near term but become the most valuable thing in the long term if general intelligence emerges.

Data Management

Data management also enhances data usability and feels like the most untapped opportunity in AI. Companies like Palantir have been helping companies manage data for more than a decade. Earlier-stage companies like Invisible (a Deepwater portfolio company) and Scale AI help ensure data usability through human correction. Pinecone provides a vector database service that also improves data usability.

Data management is probably the most underinvested category, although it may also end up being the smallest absolute opportunity across the categories in the mental model.

Interface

The interface layer is how we as consumers experience paradigm shifts. That makes interface companies popular destinations for investors. And for good reason.

Owning mass scale customer relationships creates incredible value.

Just look at the interface winners. They’re four of the five biggest companies in the world.

Microsoft won the PC interface and used that position to develop Office. Google and Amazon won as interfaces to information and commerce, respectively. Apple and Google won as the interfaces that powered the smartphone revolution. All remain dominant players in the arenas they won.

In the AI paradigm, OpenAI's ChatGPT captured the world's attention and imagination, adding 100 million users in just a couple of months. It's hard to argue that OpenAI isn't the favorite to emerge as the most valuable interface company in AI.

I wouldn't bet against OpenAI, but I would argue something different from the conventional wisdom.

Unlike in prior paradigm shifts, interface companies that already have technology-oriented relationships with customers are likely to retain those relationships by introducing new AI products rather than being disrupted by upstarts.

As consumer and enterprise companies rush to add AI capabilities to their current product suites, it will be difficult for startups pitching AI marketing automation or AI salesforce management to break through against HubSpot or Salesforce. It seems more likely that customers become more deeply integrated with existing providers that add AI than switch to new providers simply because those providers promote AI.

If you agree with the interface hypothesis, it suggests two outcomes. First, it will be harder to find startups that can break through at the interface layer in AI than in prior paradigm shifts. That doesn't mean there won't be any, but I'd bet we'll see fewer Airbnb- or Uber-type outcomes this time around as existing players eat those opportunities. Second, if existing players keep customers engaged with AI, it could create a persistent growth tailwind, provided the addition of AI creates incremental value to be shared with the customer.

And that’s perhaps the most important part of any mental model for investing.

As Richard Branson said, “A business is simply an idea to make other people’s lives better.”

AI doesn’t matter much to customers if it doesn’t help them do more with less. The greatest tech companies are all time machines. They save users time or money, which is a store of time. For all the amazing potential of AI, if an AI company can’t save users time or money, it won’t be very valuable.