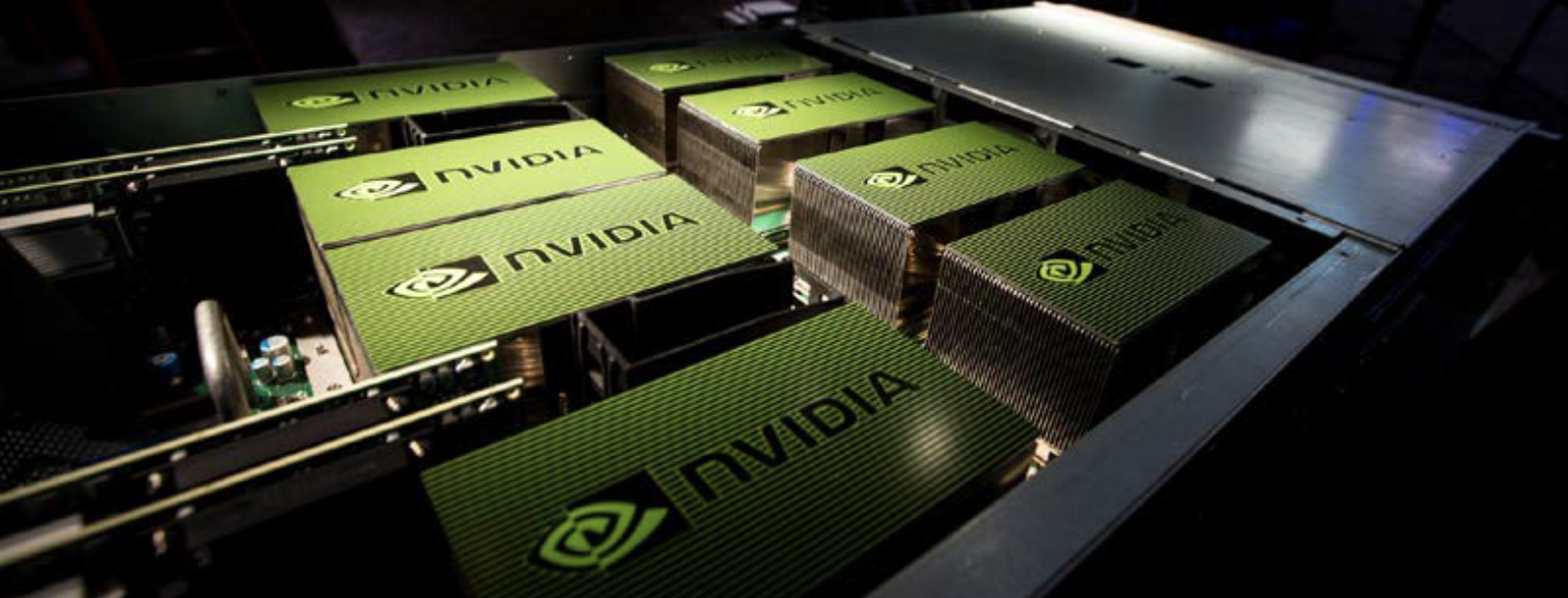

We recently ran through the top-performing stocks in the S&P 500 over the last 15 years. At the very top were household names: Netflix (>12,000% returns) and Apple (>10,000% returns), which revolutionized the way people experience the world, and Amazon (>5,000% returns), led by the wealthiest person on the planet today. Somewhat overlooked is the fabless semiconductor design company Nvidia, which achieved >5,000% share price gains in the period, even while trading 48% off its 2018 highs. Nvidia achieved this on the back of its graphics processing unit (GPU) architecture, which was originally developed to render PC graphics but has evolved to become the default processing engine in areas like virtual reality (VR), high-performance computing (HPC), and artificial intelligence (AI), including machine learning (ML).
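To put those cumulative figures on a comparable yearly footing, here is a minimal sketch that converts them into compound annual growth rates. It assumes the window is exactly 15 years and treats each ">" figure as a lower bound on share price return only; the function and variable names are illustrative, not from any data source.

```python
def annualized_return(total_return_pct: float, years: float) -> float:
    """Convert a cumulative percentage return into a compound annual growth rate."""
    # A 5,000% return means the ending value is 51x the starting value.
    growth_multiple = 1.0 + total_return_pct / 100.0
    return growth_multiple ** (1.0 / years) - 1.0


def peak_to_current_multiple(drawdown_pct: float) -> float:
    """How many times higher the prior peak was than a price that is drawdown_pct below it."""
    return 1.0 / (1.0 - drawdown_pct / 100.0)


# Lower-bound cumulative returns quoted in the text (assumption: exact 15-year window).
YEARS = 15
cumulative_returns_pct = {
    "Netflix": 12_000,
    "Apple": 10_000,
    "Amazon": 5_000,
    "Nvidia": 5_000,
}

annualized = {
    name: annualized_return(pct, YEARS)
    for name, pct in cumulative_returns_pct.items()
}

for name, rate in annualized.items():
    print(f"{name}: at least {rate:.1%} per year")

# Nvidia was quoted as trading 48% below its 2018 high.
nvidia_peak_multiple = peak_to_current_multiple(48)
print(f"Nvidia's 2018 high was about {nvidia_peak_multiple:.2f}x the price used here")
```

Under these assumptions, a >12,000% return works out to roughly 38% per year and a >5,000% return to roughly 30% per year, and a stock trading 48% off its high would need to almost double to regain it.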

Nvidia’s Next Five Years

Intel tweeted in June that it is on track to release its first discrete graphics card of this century. The company released its last discrete graphics card, the i740, in 1998. It was not a commercial success, although the technology from the i740 continues to live on in Intel’s integrated CPU cores. In the mid-2000s, Intel made a second attempt to enter the discrete graphics processor market with a project code-named ‘Larrabee.’ The product was never released due to price/performance issues. Today, with the massive growth of the cloud computing market and GPUs playing a central role in many AI-intensive cloud computing applications, there are significant incentives for Intel to continue to invest in this space.

Intel just sold off its modem business to Apple, essentially exiting the smartphone modem market (Intel does not have a cellphone processing chip) and leaving Qualcomm and MediaTek as the only platform system-on-chip (SoC) semiconductor players in the space. Nvidia smartly exited the smartphone chip market in 2014 when it stopped pursuing smartphones with its Tegra line of products, avoiding a bruising battle with Qualcomm and potentially losing billions of dollars in the process. For both Intel and Nvidia, exiting these markets was the right move, shifting the development focus away from the slowing smartphone segment to higher-growth opportunities. The growth segment Intel and Nvidia are pursuing is back-end data center processing for everything from gaming and AI to mobility, AR, and VR applications, which will grow exponentially with the coming wave of 5G and connected devices.

The Threat of New Multibillion-Dollar Silicon Design Startups

While Nvidia is well-positioned to capitalize on the above themes, there are well-funded custom silicon startups that pose a new threat to its dominance. Since Nvidia’s GPUs were not designed solely to handle machine learning algorithms, there is room for new silicon design startups like Loup portfolio company Rain Neuromorphics, Wave Computing ($203m raised), Cerebras Systems ($112m raised), Graphcore ($310m raised), SambaNova ($56m raised), Mythic Inc ($55m raised), Cambricon Technology ($110m raised), Horizon Robotics ($700m raised), and DeePhi ($40m raised) to come up with original architectures. If we expect an aggregate 10% return from the well-known Silicon Valley VC funds backing these startups, we should expect another multibillion-dollar silicon design competitor in this space in the next five years.
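For a rough sense of the capital behind these challengers, the sketch below simply totals the funding figures quoted above. Rain Neuromorphics is left out because no figure is given for it, the amounts are taken at face value in millions of US dollars, and the variable names are illustrative.

```python
# Disclosed funding quoted in the text, in millions of US dollars.
# Assumption: figures are taken as-is; Rain Neuromorphics has no quoted amount.
disclosed_funding_musd = {
    "Wave Computing": 203,
    "Cerebras Systems": 112,
    "Graphcore": 310,
    "SambaNova": 56,
    "Mythic": 55,
    "Cambricon Technology": 110,
    "Horizon Robotics": 700,
    "DeePhi": 40,
}

total_musd = sum(disclosed_funding_musd.values())
largest = max(disclosed_funding_musd, key=disclosed_funding_musd.get)
largest_share = disclosed_funding_musd[largest] / total_musd

print(f"Total disclosed funding: ${total_musd / 1000:.2f}B")
print(f"Largest single raise: {largest} ({largest_share:.0%} of the total)")
```

The total comes to about $1.6 billion across the eight startups with quoted figures, with Horizon Robotics alone accounting for a little under half of it.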

The Threat of Incumbent Cloud Platforms

An even greater threat to Nvidia’s ability to maximize its returns comes from the incumbent cloud platforms. In 2016, Google announced its proprietary tensor processing units (TPUs), application-specific integrated circuit (ASIC) chips built specifically for ML tasks. Since then, Microsoft, Amazon, and Facebook have announced their own silicon design efforts for ML, aimed at improving everything from natural language processing to image recognition applications. These cloud leaders have a different business model from Nvidia or Intel. Google offers access to its TPU servers on an hourly basis through the Google Cloud Platform. Last year Amazon announced its own proprietary ARM-based Graviton processors, which are offered through the AWS Nitro System platforms. One of Amazon’s stated goals for designing its own processors is to gain bargaining power with Intel and Nvidia when building new data centers. In China, Alibaba, Tencent, and Baidu have announced their own in-house chip design initiatives. What these cloud players excel at is a deep understanding of the software stacks that run on these platforms.

It’s still early days in the development of custom-designed chips for AI and ML, and the mega internet companies have little hardware DNA with which to advance a proprietary offering. Hardware is a game of intellectual property and continuous design execution, different from the game of building network effects in an internet platform. It is not yet evident which core competency will result in a lasting competitive advantage in capturing shareholder value in the data center space.

Disclaimer: We actively write about the themes in which we invest or may invest: virtual reality, augmented reality, artificial intelligence, and robotics. From time to time, we may write about companies that are in our portfolio. As managers of the portfolio, we may earn carried interest, management fees or other compensation from such portfolio. Content on this site, including opinions on specific themes in technology, market estimates, and estimates and commentary regarding publicly traded or private companies, is not intended for use in making any investment decisions and is provided solely for informational purposes. We hold no obligation to update any of our projections, and the content on this site should not be relied upon. We express no warranties about any estimates or opinions we make.