Neurotech for Control

In the first part of this series on Consumer Neurotech, we established a framework for thinking about Consumer Neurotechnologies and their value propositions. With the initial groundwork laid, we can finally dig into the technologies themselves. Here, in Volume II, we’ll discuss neurotechnologies that have the value proposition of Control (or, using our taxonomy from Volume I, neurotechnologies whose “Effect-type” is Control). Within the scope of this piece, this encompasses two categories: Commoditized EEG and EMG Human-Computer Interfaces.

It’s always useful to convey intuition about technology before discussing it. In this spirit, we present short fictional scenarios that describe a hypothetical neurotechnology in situ. The narratives intentionally take literary and science fictional license, while staying well within the realm of what we believe will come to market within the next 5-10 years.

Commoditized EEG

Fiction

Your phone buzzes; frenzied, you grab your notebook off the table and throw it into your shoulder bag. You have one foot out the door when you realize why your bag feels so light: it’s missing the laptop which, in the aftermath of last night’s Wikipedia binge, must be wedged between couch seats. Glancing at your phone, you see that the autonomous Uber fleet car will wait another 76 seconds, and this number stays seared into your mind’s eye as you start racing back into the living room and make a dive into the couch cushions, expertly fishing out your computer. You’ve got it in your bag; 25 seconds left, now. You run outside, open the backseat door of the fleet car, and as you exhale and situate yourself into the seat with two whole seconds to spare, you catch the sun outside glancing off a shiny, small piece of metal in the headrest. You close your eyes, buckle, and rest your head back. The electrode embedded into the headrest establishes effortless contact with your scalp, and starts recording neural signals. Behind the scenes, an algorithm notices you have patterns of brain activity that correlate with stress, and responds by darkening the windows. Your last thought before slipping into a rejuvenating nap is that yes, good job, car, the Chopin piece is a great choice, how did you know?…

• • •

As a general policy, you don’t check personal notifications at work. Back when you were working your first job out of school, there was a particularly grueling performance review, the theme of which was, “Your productivity needs to improve, or we’re going to let you go.” You left that meeting and knew exactly where all the wasted time went: you’d been handing your time out left and right to the rectangular notifications floating into and out of the top-right corner of your MacBook Pro. Plans for the weekend; coordinating the date for tomorrow night; cat GIF. You were terrified, and subsequently enacted your policy. It suffices to say your productivity improved dramatically thereafter. Today, nearer to a decade later than you’d like to acknowledge, you find yourself still on the defensive with notifications, even with these new-fangled augmented reality glasses. Last year, your employer introduced the glasses for you to use as you sit at your desk and tinker with the electronics for the toy company’s smart stuffed animal. They’re pretty useful, actually: reference documents float wherever you want, making the building process much smoother. There are also, of course, the notifications, which you religiously ignore. It’s 2pm, and a little “ding” flows through the headset and into your ears. It’s a notification. You’re well-trained to ignore these by now, but you catch the name, and see that it’s your son. Strange, you think. Doesn’t he have class right now? With one hand holding a soldering iron and the other holding the breadboard in place, you focus on the notification for the fraction of a second the system needs, and now the box slides over. Floating above the middle of your desk, it unfolds into an open message: “Hi, I have a fever, can you come pick me up from school please?”

• • •

Electroencephalography (EEG) has been used widely since it was first described by Hans Berger in 1929. EEG plays many roles in medical diagnostics, but increasingly it’s used as a control component in brain-computer interfaces. The promise of using brain signals to control technology is the stuff of science fiction, and many are slowly working to make this a reality. Within a decade or so, we think EEG technology will become fairly commoditized; in this section, we discuss products working towards that goal.

In the first fiction scenario above, an EEG sensor is built into an autonomous car so the vehicle can adjust parameters like lighting and music to support the emotional state of the rider. In the second scenario, an array of EEG sensors built into a mixed reality headset enables the user to interact with the interface hands-free. What we described above are manifestations of commoditized EEG that we think will be on the market in a 5-10 year timeframe. A number of consumer-focused standalone EEG hardware devices are already available today (there are also many research- and clinical-grade headsets, but these are woefully unergonomic and cost tens of thousands of dollars).

Neurable BCI Development Kit

- Medium of innovation: Integrated

- Device-type: Record

- Form-factor: Glasses

- Effect-type: Control, Developer

The Neurable BCI Development Kit includes both hardware and software: the hardware is a headband with dry EEG electrodes that can attach to the HTC Vive VR headset, and the software is an SDK that enables developers to leverage the EEG signals to control virtual reality applications. One of their demonstration videos features a simple virtual reality game in which users can pick up objects just by thinking, then throw those objects at targets, again just with thought. Neurable has a Unity plugin that enables developers to quickly start using brain control for their virtual reality applications.

Emotiv Epoc+

- Medium of innovation: Integrated

- Device-type: Record

- Form-factor: General Headset

- Effect-type: Control, Feedback, User Characterization, Developer

The Emotiv Epoc+ is an $800, 14-channel EEG device. It can be used with an ~$80 / mo. / seat subscription to the EmotivPRO software suite, which gives researchers and developers convenient ways to observe and use the neural signals recorded by the headset. Users can pay an additional $80 (one-time fee) for the BrainViz software to visualize EEG data. Emotiv also offers a software package called EmotivBCI, a free tool that lets developers use the EEG headset for brain-computer interfaces. Developers can train the headset to distinguish up to four commands, such as the hypothetical command to “open this notification.” EmotivBCI also features signals about the user’s mental state, which could in principle be used in a manner similar to the autonomous car scenario above.

OpenBCI

- Medium of innovation: Integrated

- Device-type: Record

- Form-factor: General Headset

- Effect-type: Control, Feedback, User Characterization, Developer

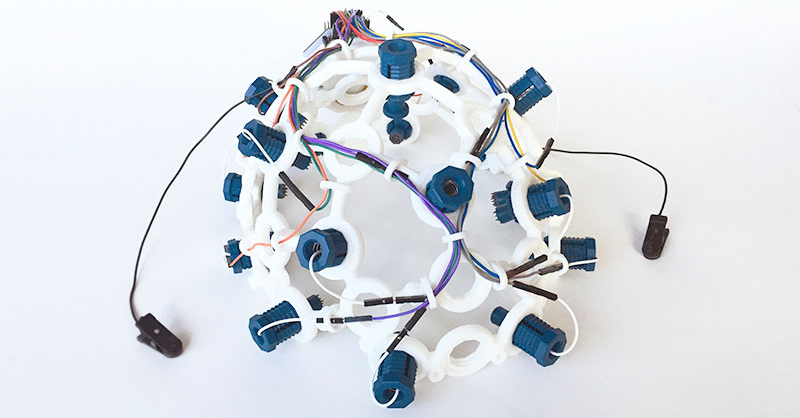

OpenBCI creates a series of partially DIY, open-source EEG electronics boards. They offer a 4-channel version for $200, an 8-channel version for $500, and a 16-channel version for $950. Customers can also purchase a 3D printed headset with electrodes, which costs between $350 and $800 depending on how much of the assembly the customer wants to undertake on their own.

Takeaways

- For standalone EEGs, there’s an opportunity around software for processing signals and making them accessible to developers. Emotiv’s EmotivPRO software capitalizes on this opportunity, and we’re aware of others pursuing this path as well. The goal is to create a simple interface for non-neuroscientist/neuroengineer developers that lets them incorporate aspects of Control and User Characterization into their products. EEG analysis is complicated, and many patents exist around just the manipulation of EEG data; abstracting the signal processing makes it possible for non-specialists to leverage the benefits of EEG.

- It’s reasonable to consider what an EEG marketplace might look like. Hypothetically, we can see two types of products: those that leverage the EEG for Control or User Characterization in another user-facing application, and those that give developers new ways to use the EEG signal itself. The latter could lend itself to a marketplace akin to the Atlassian Marketplace: for example, developers building on Neurable could purchase additional apps that perform complex and specialized processing on the raw EEG data, and Neurable would take a cut of these purchases or subscriptions. In other words, we think there’s an opportunity for a “data processing marketplace” that offers different ways to act on the data created by the hardware. In contrast, it doesn’t make sense to build a standalone marketplace for user-facing EEG-enabled software, since the software will run on another device or operating system (e.g., iOS), and be distributed through that platform natively (e.g., iOS App Store).

- We don’t see standalone scalp EEGs ever succeeding at scale like smartphones, because EEG listens to users but can’t tell them anything. In contrast, smartphones “listen” through their touch screen and “speak” by displaying visuals on the screen; augmented reality glasses “listen” to gesture, voice, and gaze input, and “speak” through their displays as well. Imagine asking a user to constantly wear an augmented reality headset with its lenses removed: it would never happen because that device couldn’t “speak” to the user.

- We do, however, think that most future XR headsets will incorporate EEG, since those devices already need to be worn on the head. Neurable is focusing its efforts here: as Neurable’s CEO remarks, virtual/augmented/mixed reality will never find its “killer application” until it finds its “killer interface.” EEG in the form-factor of XR headsets is likely a large part of this killer interface; anyone who has spent time using or developing XR applications understands the difficult constraints around using hands or voice for input. Thus, in evaluating the size of the Commoditized EEG market, it makes sense to consider that consumer EEG will likely proliferate as a function of XR, and not of its own accord.

Use-Cases and Market Size

To estimate the size of the Commoditized EEG market, we estimate the markets that will involve Commoditized EEG as part of the respective technologies. By our logic above, we believe this to be mostly limited to the XR market, where XR headsets will incorporate EEG sensors, just like smartphones incorporate microphones. This market has two components: the revenues generated by the introduction of EEG into hardware, and the revenues generated by EEG-enabled software.

To model the hardware component, we use our internal AR/VR models and estimate the percentage of EEG-enabled devices for a given year.

To model the software component, we assume that every application available on an XR headset which leverages EEG will either use OS-level integrations (such as an EEG version of a mouse click) or will require custom analysis of EEG signals (a custom analysis seems reasonable because there are many patents pertaining to EEG signal processing methodologies for very specific applications). Therefore, if we take the number of XR devices with EEG capabilities, multiplied by the number of applications the average user uses per year, multiplied by the fraction of applications requiring unique signal processing (a guesstimate), then we get a gross estimate of how many different seats will be required for signal processing packages. If we assign an average price per seat to this, then we can roughly estimate a market size.

We must therefore estimate three additional values: the number of apps a user uses per year, on average; the fraction of apps requiring custom EEG signal processing; and the average spend per user per year per application (glossing over the distinction between consumer and enterprise). We estimate the number of applications based on a report from App Annie. We guess that custom-EEG applications will make up 20% of all applications; we choose this small value because EEG signal processing is a specialized skill, which subjects EEG-enabled applications to talent constraints. Finally, we make an ad hoc guess that the annual spend per user per application is $5 (this represents an average of the few expensive applications and the many cheap or free applications). We’re more bullish on AR/MR than on VR; our estimate is that in 2025, EEG-enabled Augmented/Mixed Reality technology will be involved in $71.6B in revenue.
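For readers who want to play with the assumptions, the software-side arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not our internal model: the function and field names are made up for this example, and the device count, EEG share, and apps-per-user values are arbitrary placeholders rather than the figures behind our estimate. Only the 20% custom-EEG fraction and the $5 annual spend come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoftwareMarketInputs:
    """Inputs to the rough EEG software market estimate (names are illustrative)."""
    xr_devices: float           # XR headsets for the year (placeholder value below)
    eeg_share: float            # fraction of those headsets with EEG (placeholder)
    apps_per_user: float        # apps the average user uses per year (placeholder)
    custom_eeg_fraction: float  # fraction of apps needing custom EEG processing
    spend_per_seat: float       # annual spend per user per application, in USD


def eeg_devices(inputs: SoftwareMarketInputs) -> float:
    """Number of XR devices with EEG capabilities."""
    return inputs.xr_devices * inputs.eeg_share


def signal_processing_seats(inputs: SoftwareMarketInputs) -> float:
    """Seats needed for signal processing packages: devices x apps x custom fraction."""
    return eeg_devices(inputs) * inputs.apps_per_user * inputs.custom_eeg_fraction


def software_market_usd(inputs: SoftwareMarketInputs) -> float:
    """Rough annual software market size: seats x average price per seat."""
    return signal_processing_seats(inputs) * inputs.spend_per_seat


# Placeholder scenario: the first three values are hypothetical, chosen only to
# show the arithmetic; 0.20 and 5.0 are the guesses stated in the text.
example = SoftwareMarketInputs(
    xr_devices=10_000_000,
    eeg_share=0.5,
    apps_per_user=30,
    custom_eeg_fraction=0.20,
    spend_per_seat=5.0,
)

if __name__ == "__main__":
    print(f"EEG-enabled devices: {eeg_devices(example):,.0f}")
    print(f"Signal processing seats: {signal_processing_seats(example):,.0f}")
    print(f"Software market (USD): {software_market_usd(example):,.0f}")
```

Because every input is a multiplier, the estimate scales linearly with each guess: doubling the custom-EEG fraction or the spend per seat doubles the result, which is worth keeping in mind given how rough those two values are.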

EMG Human-Computer Interfaces

Fiction

Step, step, step—you pace forward, across the room from the projector. About-face, then begin the journey back. Four years ago when you founded your company, you dreamed of many things. You dreamed of the day you’d open a door to your own office in your own building. You dreamed of the day you’d have enough of a staff tackling bugs and engineering challenges that your primary cognitive output would be strategic planning, the domain where you feel your talent can shine unencumbered. You turn again and keep pacing. Omnigraffle is open on the screen, and between steps you pause for a moment to fidget with the armband on your dominant forearm. You reflect on the fact that, somehow, these dreams are now the reality—here you are, deep inside a flow-chart, planning out the next five years of your business, walking around your own office in your own building. Beginning a gratitude journal was one of the best decisions you ever made; with a twitch of your hand, you pull open your journal on the screen, planting hands on hips and feet towards the screen. With no keyboard necessary, you subtly twitch your forearm again, and your words of appreciation show up on the screen. After thirty rapid seconds of typing, you’re glowing inside, and—oh!—another potential R&D direction comes to mind. You’re walking again, now with arms crossed, and with just a pinky, you bring Omnigraffle back to the front, ready to add to the chart. Twitch, and there the idea is, up on the screen.

• • •

Electromyography (EMG) armbands can translate muscle movements and/or twitches into digital signals, which can then be interpreted to understand the motion (or intended motion) of a user’s hand or arm. The scenario above describes the effortless use of a near-future EMG armband for Control. If a consumer product can record high-fidelity EMG signals, these signals can be used to reconstruct arm and/or hand movement. This opens up new ways to control technology and can be achieved in an ergonomic form-factor, since EMG hardware can be miniaturized into an armband.

Myo Gesture Control Armband

- Medium of innovation: Integrated

- Device-type: Record

- Form-factor: Armband

- Effect-type: Control, Developer

[Note: a few weeks after the publication of this piece, Thalmic Labs announced that they had rebranded as North, a company which produces fashion-conscious smart glasses. The Myo Gesture Control Armband is no longer sold. We’ve left the content below intact, as it existed prior to the announcement.]

To motivate the EMG opportunity, we’ll discuss two well-funded startups that are developing EMG Control interfaces. The first is Thalmic Labs, which builds the Myo Gesture Control Armband. These armbands are already shipping and cost $130 per unit. Thalmic Labs also has a marketplace for Myo-compatible applications; some apps are developed in-house and some by third-party companies, such as a $50 music software product called Leviathan. Leviathan lets users interface with other music software using the Myo armband. By way of example, Leviathan lets DJs adjust the volume of a track by moving their arms. Thalmic Labs has raised $135.6M.

CTRL-kit

- Medium of innovation: Integrated

- Device-type: Record

- Form-factor: Armband

- Effect-type: Control, Developer

The second company pursuing an EMG armband is CTRL-labs. CTRL has raised $39M to develop an armband that can record muscle twitches. The ultimate goal is to replace typing and other input interfaces by capturing a user’s “intention” to move without the user having to actually contract the muscle and execute the movement. In one demonstration, CTRL reconstructs a user’s intended hand movement while another person holds the user’s fist closed. CTRL is still in the development phase: the development kit is supposed to ship in late 2018. There’s no indication of the price of the developer kit, but given that the highest-end keyboards and mice cost a few hundred dollars, it’s hard to imagine spending more than that on a device to control a computer.

Takeaways and Use-Cases

- To list some use-cases that are already possible, or may soon be: a surgeon can control equipment in an operating room without becoming distracted from the current task. A DJ can adjust music volume and lighting using her arms. Complex machinery like factory components and automobiles can be intuitively controlled. When paired with AR/VR, this technology can enable engineers and artists to use their hands as their primary tool for creating and modifying 3D models. A programmer can hop between files by twitching her pinky. Anyone can type rapidly with their hands on their hips or their arms folded. Extrapolating, the big insight of EMG armband technology is that controlling computers and machines will require less physical activity, particularly for tasks that require extensive movement right now, such as typing and 3D model manipulation. EEG, in contrast, will probably be better for quick, frequent commands like navigating through menus and selecting items on-screen.

- Sizing this market is unrealistic because it would involve exhaustively enumerating the use-cases. If this technology eventually works as advertised, the market will be gigantic: perhaps as large as the set of use-cases that currently require a mouse and/or keyboard. Mice and keyboards aren’t large market opportunities, but EMG is subject to different constraints because a) the components of an armband will probably be more expensive, and b) EMG armbands won’t be sold with every computer, so the business models will operate differently (imagine requiring a user to wear a different armband for every computer or tablet they use regularly).

- EMG armbands have two characteristics that make us think they can succeed at scale: a) the armband form-factor is already socially acceptable (watches, smartwatches, fitness trackers, bracelets, etc.); and b) EMG armbands can provide many degrees of freedom to control a computer. The CTRL-kit, for example, seems to be able to capture as many degrees of freedom as the human hand has.

- Thalmic Labs is making a platform play by attempting to own the marketplace for apps that use its interface. We aren’t sure whether this is sustainable. If we assume the ultimate goal is to make this control interface ubiquitous, then many apps in different ecosystems like iOS or a mixed reality platform will utilize the control input. Large-scale proliferation of EMG armbands seems incompatible with a marketplace business model, since so many of the integrating applications are distributed through incumbent marketplaces.

Conclusion

In Volume II of our five-part series on Consumer Neurotechnology, we discussed neurotechnologies that enable their users to control computers in new ways. In Volume III, we’ll look at technologies that use neurofeedback. Stay tuned!

- Volume I: Introduction

- Volume II: Neurotech for Control

- Volume III: Neurotech for Feedback

- Volume IV: Neurotech for Brain Modulation

- Volume V: Other Neurotech (The Odd Ones Out)

Disclaimer: We actively write about the themes in which we invest or may invest: virtual reality, augmented reality, artificial intelligence, and robotics. From time to time, we may write about companies that are in our portfolio. As managers of the portfolio, we may earn carried interest, management fees or other compensation from such portfolio. Content on this site including opinions on specific themes in technology, market estimates, and estimates and commentary regarding publicly traded or private companies is not intended for use in making any investment decisions and provided solely for informational purposes. We hold no obligation to update any of our projections and the content on this site should not be relied upon. We express no warranties about any estimates or opinions we make.