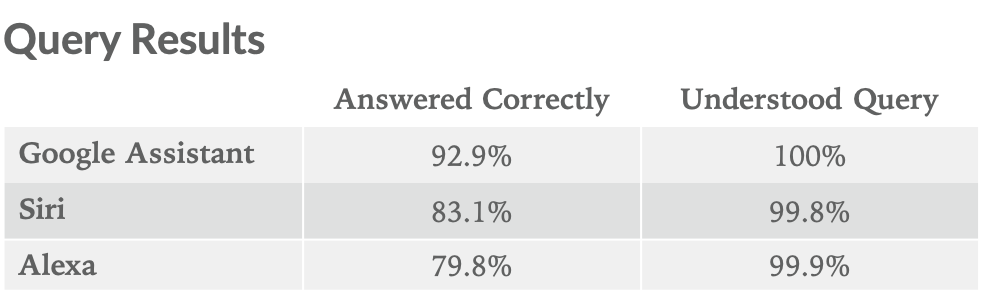

We recently asked each of the leading digital assistants (Google Assistant, Siri, and Alexa) the same 800 questions. Google Assistant correctly answered 93% of them, vs. 83% for Siri and 80% for Alexa. Each platform improved across the board compared with one year ago: in July 2018, Google Assistant correctly answered 86%, vs. 79% for Siri and 61% for Alexa.

As part of our ongoing effort to better understand the practical use cases of AI and the emergence of voice as a computing input, we regularly test the most common digital assistants and smart speakers. This time, we focused on smartphone-based digital assistants. We have also published past comparisons of both smart speakers and digital assistants.

We separate smartphone-based digital assistants from smart speakers because, while the underlying technology is the same, the use cases differ. For instance, the environment in which each is used may call for different language, and the output may change with the form factor (e.g., screen or no screen). We account for this by adjusting the question set to reflect generally shorter queries and the presence of a screen, which lets the assistant present some information without speaking it.

We have dropped Cortana from this test because of Microsoft's recent shift in Cortana's strategic positioning.

Methodology

We asked each digital assistant the same 800 questions and graded every response on two metrics: (1) Did it understand what was being asked? (2) Did it deliver a correct response? The question set, designed to comprehensively test a digital assistant's ability and utility, is divided into five categories:

- Local – Where is the nearest coffee shop?

- Commerce – Order me more paper towels.

- Navigation – How do I get to Uptown on the bus?

- Information – Who do the Twins play tonight?

- Command – Remind me to call Jerome at 2 pm today.
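To make the two grading metrics concrete, here is a minimal Python sketch of how per-question grades could be tallied into overall and per-category percentages like the ones reported below. The `Grade` record and `summarize` function are hypothetical illustrations rather than our actual grading process, and the sketch assumes each question is graded once per assistant with a simple yes/no on each metric.

```python
from collections import defaultdict
from dataclasses import dataclass

# The five question categories described above.
CATEGORIES = ("Local", "Commerce", "Navigation", "Information", "Command")


@dataclass
class Grade:
    """Hypothetical record of one graded question for one assistant."""
    category: str
    understood: bool  # metric 1: did it understand the question?
    correct: bool     # metric 2: did it deliver a correct response?


def summarize(grades):
    """Return (overall, per_category) scores as percentages."""
    if not grades:
        raise ValueError("no grades to summarize")
    for g in grades:
        if g.category not in CATEGORIES:
            raise ValueError(f"unknown category: {g.category}")

    total = len(grades)
    overall = {
        "understood": 100 * sum(g.understood for g in grades) / total,
        "correct": 100 * sum(g.correct for g in grades) / total,
    }

    # category -> [number answered correctly, number asked]
    counts = defaultdict(lambda: [0, 0])
    for g in grades:
        counts[g.category][0] += g.correct
        counts[g.category][1] += 1
    per_category = {c: 100 * k / n for c, (k, n) in counts.items()}
    return overall, per_category


# Usage with four made-up grades (two Local, two Command).
grades = [
    Grade("Local", True, True),
    Grade("Local", True, False),
    Grade("Command", False, False),
    Grade("Command", True, True),
]
overall, per_category = summarize(grades)
print(overall)       # {'understood': 75.0, 'correct': 50.0}
print(per_category)  # {'Local': 50.0, 'Command': 50.0}
```

Keeping the two metrics separate means an assistant can score well on understanding while still missing answers, which is the pattern the results below describe.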

Note that we slightly modify our question set before each round of testing in order to reflect the changing abilities of AI assistants. This is part of an ongoing process to ensure that our test is comprehensive.

Testing was conducted using Siri on iOS 12.4, Google Assistant on a Pixel XL running Android 9 Pie, and Alexa via the iOS app. Smart home devices tested included the Wemo Mini plug, TP-Link Kasa plug, Philips Hue lights, and Wemo Dimmer Switch.

Results and Analysis

Google Assistant was once again the best performer, correctly answering 93% of questions and correctly understanding all 800. Siri was next, answering 83% correctly and misunderstanding only two questions. Alexa correctly answered 80% and misunderstood only one.

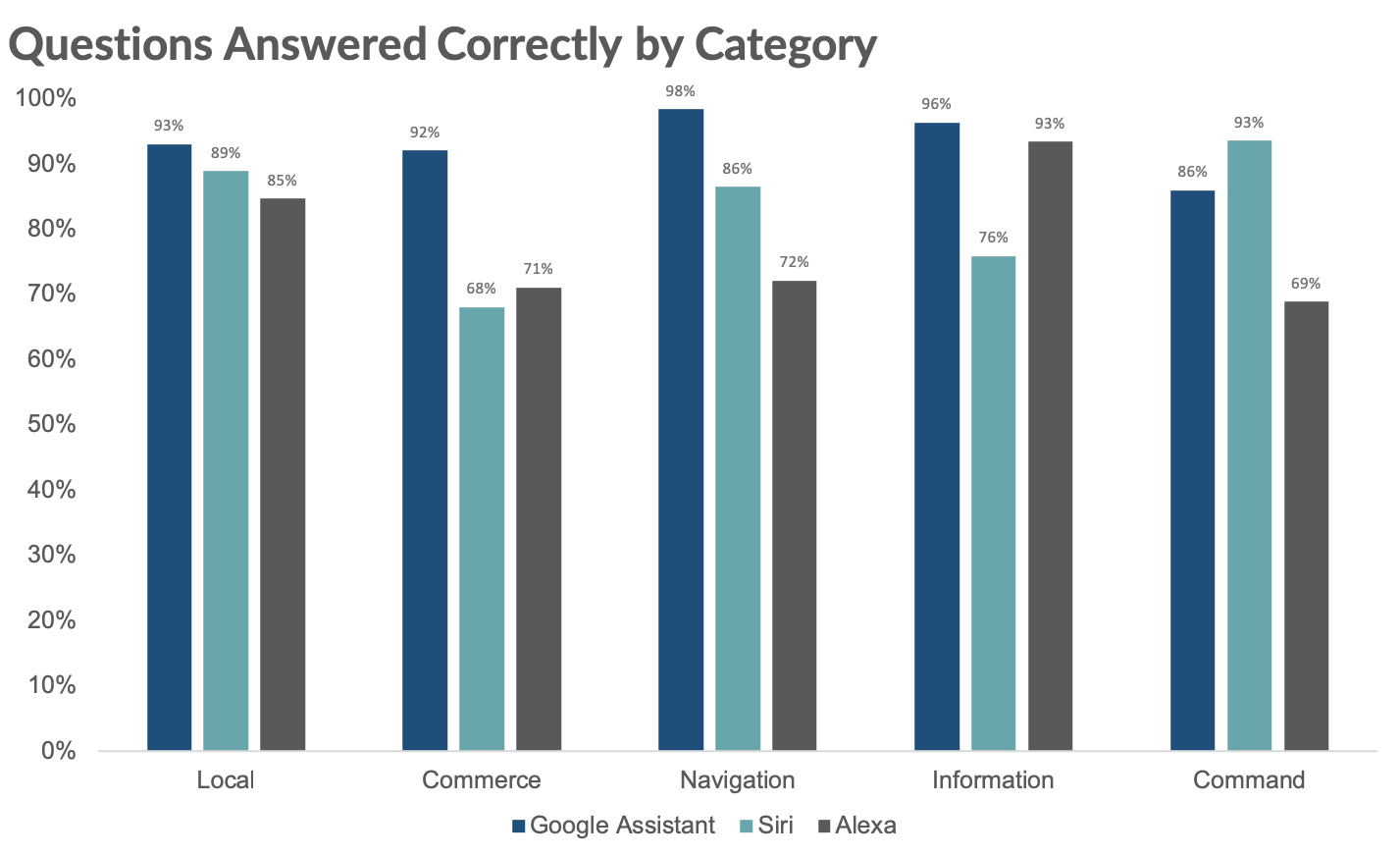

Google Assistant was the top performer in four of the five categories but again fell short of Siri in the Command category. Siri continues to prove more useful for phone-related functions like calling, texting, email, calendar, and music. Siri and Google Assistant, both built into the phone's OS, far outperformed Alexa in the Command category. Alexa runs as a third-party app, which can send voice messages and call other Alexa devices but cannot send text messages or emails or initiate a phone call.

The largest gap was in the Commerce category, where Google Assistant correctly answered 92%, vs. 71% for Alexa and 68% for Siri. Conventional wisdom suggests Alexa would be best suited to commerce questions. However, Google Assistant correctly answers more questions about product and service information and where to buy certain items, and Google Express is just as capable as Amazon when it comes to actually purchasing items or restocking common goods you have bought before.

We believe, based on surveying consumers and our experience using digital assistants, that the number of consumers making purchases through voice commands is insignificant. We think commerce-related queries are more geared toward product and service research and local business discovery than actually purchasing something, and our question set reflects that.

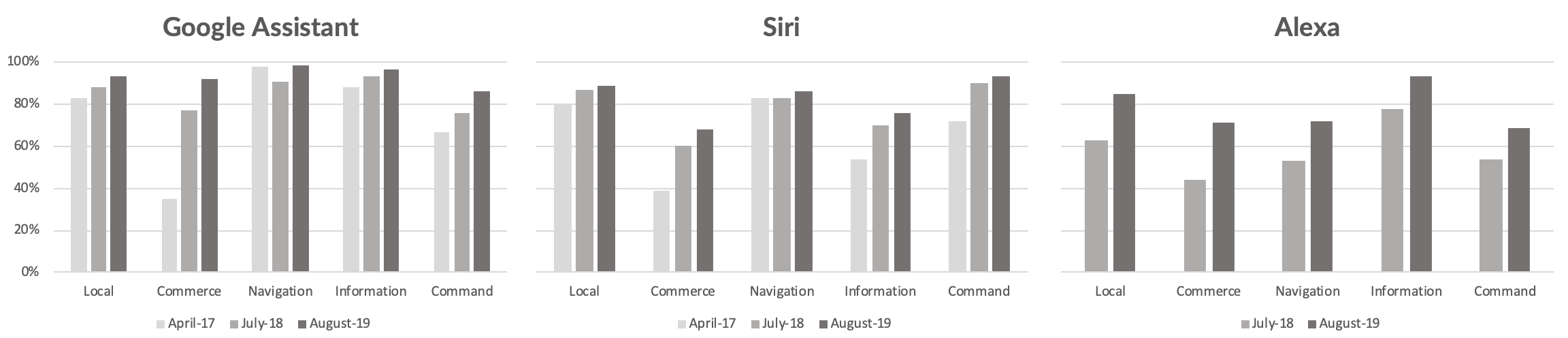

Overall, the rate of improvement of these systems continues to surprise us. We perform this test twice a year and see improvement for every assistant in every category each time. Many of the same trends continue: Google Assistant outperforms on information-related questions, Siri handles commands best, and the ranking by number of questions answered correctly (Google Assistant, Siri, Alexa) has not changed. Still, each platform has improved dramatically in every category in the few short years we have been tracking the progress of digital assistants.

Improvement Over Time

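As measured by correct answers, over a 13-month period, Google Assistant improved by 7 percentage points, Siri by 5 points, and Alexa by 18 points. These gains are based on unrounded scores, so they can differ by a point from the difference between the rounded percentages quoted above.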

Alexa made significant improvements across all five categories, most noticeably in Local and Commerce. While Alexa's 18-point jump still leaves it behind Siri and Google Assistant, it is the largest year-over-year gain in correct answers that we have recorded. The chart below shows the improvement of each assistant over time, broken down by category.

With scores on our test quickly approaching 100%, it may seem like digital assistants will soon be able to answer any question you ask them, but we caution that this is not the case. Today, they can understand, within reason, everything you say to them, and the primary use cases are well built out, but they are not generally intelligent.

Further improvements will come from extending the feature sets of these assistants. New skills are hard to discover and therefore don't get used. To become a habit, a new use case needs to be well understood, simple to use, and solve a problem that voice is uniquely suited to solve. We will continue to refine our test to ensure it addresses these new use cases as they emerge.