We’ve written before about our thoughts on AirPods and “hearables” — ear-worn wearables for audio augmented reality:

We believe audio as a UI is a key enabler of AR technology. AirPods may not be perfect, but they’ll get better, smarter, and easier to use. They are just the beginning for hearables and a new wave of computing.

AirPods Are Unlocking Audio AR

Everyone seems to be waiting for Apple to announce “iGlasses” for visual augmented reality. Some argue the iPhone is the central piece of Apple’s AR platform: lenses, a processor, and a display serving as a window into a visually augmented world.

We think Apple’s ecosystem of devices is the critical asset for Apple’s strategy in AR. In other words, the interaction between iPhone, Apple Watch, AirPods, and, yes, some future headworn wearable is how Apple will deliver a unified augmented layer or layers.

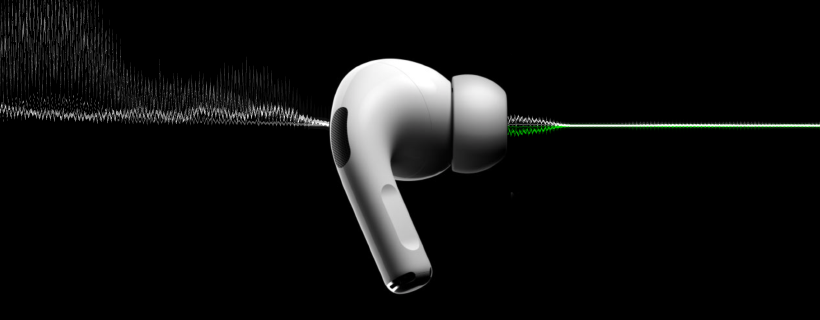

When Apple announced AirPods Pro, we were excited to test them out through that lens. How do AirPods Pro advance Apple’s AR strategy? Here’s our take on the new AirPods and the state of the union of audio augmented reality.

In addition to existing features like Hey Siri and Audio Sharing, which we view as audio AR, Apple added Transparency Mode to the AirPods platform.

- Transparency Mode: Passes through audio from a user’s surroundings that would otherwise be blocked out by the noise-canceling headphones. For example, listen to music on a bike ride in Transparency Mode and you can still hear the sounds of nearby traffic.

- Hey Siri: Allows a user to access Siri controls on a connected device hands-free by simply saying “Hey Siri.” This feature adds a user interface to the AirPods that enables much more complex computing than the buttons and sensors on the headphones could otherwise handle; for example, making calls or starting a certain podcast or song is only possible directly via AirPods because of Hey Siri controls. In our view, Hey Siri is a user interface that enables an audio AR layer with AirPods.

- Audio Sharing: Makes it possible for an AirPods user to share her music with another AirPods user simultaneously. Skip the idea of sharing a pair of headphones and listen to Spotify together as you walk down the street.

The Possibilities of Audio AR

Beyond Hey Siri and Transparency Mode, here’s what we envision when we consider audio AR in the future and what Apple’s ecosystem is capable of delivering:

- Hearable Spaces: Imagine walking through Central Park listening to an audiobook that takes place in Central Park. An audiobook with Hearable Spaces could guide the listener through a story while they experience the story’s location physically in real time. Wikipedia could bring the physical world alive with its information plus an audio AR layer; museums could automate tours; retail stores could enrich their merchandising with additional product information; drive-thrus could be a thing of the past.

- Comfort Zones: Similar to Hearable Spaces, which actively provide an audio track to the user based on the surroundings, Comfort Zones in an office or a park could provide a calm, quiet, audio-enabled experience based on a specific location.

- Crowd Noise: Live sports, gaming, and esports could be augmented by a real-time crowd noise audio track. Use the microphone on AirPods to gather the reactions of the audience, mix the audio into a single track of crowd noise, and feed that track back to the audience to augment the live viewing experience.

- Live DJ and Book Club: What if an iMessage chain could also feature a live audio track that every member of the group could hear simultaneously? Listen to a playlist together, remotely but in real time, or read an audiobook with your book club and text your thoughts to the group.

- Hello, My Name Is: Siri could notify you of someone’s name before you enter a room or when you encounter a person you’ve met, based on location and/or nearby iOS devices.

- Selective Hearing: Identify a speaker in a crowd of people and augment the volume of that speaker via a nearby iOS device and AirPods. iOS already has an accessibility feature called Live Listen that treats the iPhone as a remote microphone and the AirPods as a hearing aid. Take this feature mainstream.

- Remote Listening: Listen to an event remotely via an iOS device at that location and your AirPods. This would require double opt-in from the source and the recipient. It could work much like the crowd-sourced location information Apple is gathering from the network of iOS devices around the world for the new Find My app. Remote Listening could leverage the global network of iOS devices to share audio from a certain location to your AirPods.

- Teleportation: Users could enable the same type of audio sharing within their private network of iOS devices. Tap into your HomePod to check in at home.

- Personal Trainer: A coaching layer could provide health and wellness suggestions to a user throughout the day in real time. Just as the Apple Watch has Stand Reminders, the Activity app could provide tips to improve your health based on biometrics gathered by AirPods. Or, with permission to persistently listen to what a user says throughout the day, a coach could provide feedback on communication skills, parenting, managing others, etc.

Feedback Loup: AirPods Pro

- Bottom Line: I own two pairs of AirPods (gen 1). One pair was lost in a parking lot for an entire Minnesota winter and was found in the spring in need of nothing more than a charge. I loved the AirPods Pro that I tested, and I’ll upgrade eventually, but not until my other AirPods die, get lost, or find a new owner.

- Pricing: AirPods is a computing platform, not a commodity that should be expected to ship in the box with every new iPhone. The $249 price point for AirPods Pro reminded us of Jeff Bezos’s now-famous summary of Amazon’s (and Apple’s, I think) pricing strategies: “There are two kinds of companies, those that work to try to charge more and those that work to charge less. We will be the second.” Apple is the first. With AirPods Pro, Apple is working hard to charge more (and still deliver great value).

- Active Noise Cancellation: Worked better than I expected a pair of in-ear buds to work. Certain sounds, like a white noise machine or other background sounds, are completely eliminated while others, like a loud conversation, are not. In one example, working out with the Peloton app and AirPods Pro, Noise Cancellation mode was nirvana. The gold-standard test for noise-canceling headphones is an airplane; unfortunately, I haven’t yet tested AirPods Pro on a plane. I’m not optimistic that they’ll suitably replace a pair of Bose QuietComfort headphones, but for light travelers they may do the trick without all the added bulk of those Bose monsters.

- Transparency Mode: Also worked better than I expected. I was imagining an experience similar to an old pair of hunting earmuffs I used, which protect my ears from the sound of a gunshot but allow for conversation. Transparency Mode is much more natural, especially after 30 seconds or so of normalization. The problem with Transparency Mode is that other people don’t know you’re in Transparency Mode. Subtle lights on the end of each AirPod could become a signal to others, but social norms around always-on earphones will limit what’s possible here.

- Look & Feel: The bigger case was a concern, but I found it to fit in my pocket just as nicely as the (smaller) original AirPods case. AirPods Pro take longer to get into your ears, especially if you take care to get a nice seal between the tip and your ear. In fact, when I went back to my original AirPods, I found the speed of putting them in to be an “upgrade.” The longer stem of the original AirPods seems more consistent with the stem of the corded EarPods, an iconic design. The shorter, curved stem of AirPods Pro lacks that same iconic look but achieves a simpler, more discreet look that is probably a net positive.

Conclusion

AirPods Pro is a big step forward in Apple unlocking audio as an AR layer. More specifically, we see several layers of audio augmented reality emerging:

- Information layer: The examples of Hearable Spaces and Personal Trainer outlined above represent an information layer to audio AR. Users opt into additional audio-based information to augment their surroundings and provide helpful context.

- Entertainment layer: Audio as entertainment is nothing new, but use cases like Crowd Noise for live sports, Remote Listening, and Comfort Zones for unique aural experiences enable new forms of real-time audio-based entertainment.

- Social layer: Social listening layers like Live DJ and Book Club for shared content could enable groups to connect in new ways. Selective Hearing and Teleportation would also allow users to tap into audio environments independent of their actual location for a new type of social experience.

- Notification layer: Unlike the information layer, the notification layer is pushed to a user (following user consent). Capabilities like Hello, My Name Is could expand on the existing notifications that Siri provides via AirPods today.

We’re encouraged by the opportunities we see in the audio AR space. Apple, Google, and Amazon will dominate the ecosystem, but we expect each of them to open up their audio AR platforms for developers to invent new forms of audio experiences.