The next installment in our AI series explores what we expect to be one of the biggest areas of AI in the next three to five years: Personal AI. It has the potential to create a trillion-dollar company. Maybe more than one.

AI x Human Nature

Should an AI be able to refuse to buy an obese person a soda? An addict drugs?

Should an AI only present a neutral political view when asked about politics?

Should an AI censor “dangerous” theories? Like the earth orbiting the sun? That idea was once considered dangerous enough to put Galileo on trial.

These are questions we’ll be forced to answer in the coming years as we enter the era of Personal AI.

Growth investors are tasked with deploying capital according to their view of where the world is going. Benchmark, the legendary VC firm, says its job is to see the present clearly rather than to predict the future, but I think that misses some nuance. I think the question is better stated as: “How will the emergent technologies of the present collide with human nature to create the future?” Technologies change, but human nature does not. It is the intersection of the two that shapes how the world changes, not either one alone.

In the present, it’s clear that AI is the defining technology of the next decade, and maybe far beyond that. To say AI is a massive investment opportunity is so consensus as to be useless. But how will AI collide with human nature? That’s where Personal AI comes in, and it’s where the next trillion-dollar AI company may emerge.

What is Personal AI?

Today’s core AI tool, ChatGPT, is mostly just a step beyond Google. ChatGPT can answer questions, write copy, and write code, but it can’t do much beyond that. It can’t order stuff from Amazon. It can’t manage our calendars. It can’t send emails.

That isn’t meant to belittle the achievement of ChatGPT. It will forever be a key initiator of the AI revolution.

The ideal of AI is something like Jarvis in the Iron Man movies, not just an advanced Q&A tool. We want an AI that understands us uniquely, that can do things for us, that we can trust. That’s a Personal AI.

Mustafa Suleyman described Personal AI as “a chief of staff for your life…a scheduler, an organizer, an advocate, a buyer, a booker.” Suleyman is the CEO of Inflection AI, which is building such a product. Inflection’s AI is called Pi, short for “personal intelligence.”

Personal AI is the future because it’s what we want AI to be — our own digital servant. Personal AI will create an Everyone company because everyone will want their own AI, and Everyone companies can’t help but be massive.

What’s the catch?

To Embrace or Fight Human Nature

A recent conversation with Pi went like this:

Doug: Human nature never changes.

Pi: Do you think that’s a result of our biology or our psychology or both?

Doug: Probably both and reinforced by societal structure which is a reflection of those factors too. It’s sort of reflexive.

Pi: You’re essentially arguing that society and human nature interact in a way that creates a reinforcing cycle. So as human nature influences society, society in turn reinforces certain aspects of human nature, and vice versa…

Exactly.

Our self-reinforcing and unchanging human nature creates a challenge for Personal AI companies: give humans what we want, serving all the flaws of our nature, or build something no one will use.

Social media is a case study in serving our basest wishes. Human nature thrives on emotion, which leads to social feeds filled with negativity and tribalism. That negativity has become a feature of social media because it holds attention, and attention is the product social media sells.

Social is easy to pick on, but you can find flaws in many industries that are byproducts of human nature. All vice industries (alcohol, cigarettes, porn) thrive because of the human tendency toward immediate gratification and stimulation. We have growing pollution because energy consumption enhances human comfort, a basic desire. We have an obesity problem because eating sugary, fatty foods feels good, so that’s what food companies sell.

As a rule, the more a product’s key feature resists basic human nature, the more likely the product is to fail.

That’s why feel-good news companies, peer-pressure health groups, and phones without apps don’t work. They hope for the best of human nature to prevail over the base, but it doesn’t. It can’t. Otherwise the entire structure of humanity would be completely different.

So, now let’s revisit our questions from the open:

Should an AI be able to refuse to buy an obese person a soda? An addict drugs?

If the AI is supposed to be an advocate for your health, it should prevent you from doing these things. If the AI is supposed to serve you, then it should do what you want, even if that’s not good for you.

Should an AI only present a neutral political view when asked about politics?

If the AI is supposed to pursue truth, then it shouldn’t need neutrality. It should just present facts. If the AI is supposed to represent you, then it should adhere to your political biases.

Should an AI censor “dangerous” theories?

You get the gist.

If all of this seems rather philosophical, it is. Philosophy is necessary when trying to predict a future that will depend on the collision of a new technology and our unchanging nature.

The underlying debate we will be forced to have as Personal AI becomes our future is this: do we want to maintain control of the technology meant to serve us personally, or cede control to a third party we hope knows best?

It’s not a trivial question, and it presents the setup for a potentially epic investment in the future.

The Three Kinds of Personal AI

“Centralized and held control is tyranny. I don’t like anarchy either, but I’ll always take anarchy over tyranny. Anarchy, you have a chance.”

— George Hotz on Lex Fridman’s Podcast

The last two-plus decades of the Internet have been defined by growing centralization. Power over how we consume information rests largely in the hands of a few companies: Apple, Amazon, Google, Microsoft, and Meta. They provide devices, services, and cloud infrastructure that we touch every day.

They also provide the AI we use every day, with Microsoft getting help from OpenAI.

Centralized power on the Internet has enabled easy-to-use products to flourish with billions of users. That’s what centralization can do: bring scalable simplicity to the complex. It’s been the perfect strategy for Web 1.0 and Web 2.0.

Centralized power comes with obvious costs. We unconsciously ceded all of our data, our connections, our lives to the Internet Giants. Maybe that is a byproduct of our nature. Maybe we should bet on humans making the same mistake in the AI era.

I’m not sure.

We are now aware of our lack of control over our online lives, and freedom is one of our most fundamental instincts. It’s why free societies, like ours in America, have flourished while communist and socialist ones have not.

The potential battle for AI is the culmination of user hesitation over centralized control of the Internet. If you think that the censorship on social media is bad, wait until it pervades your Personal AI.

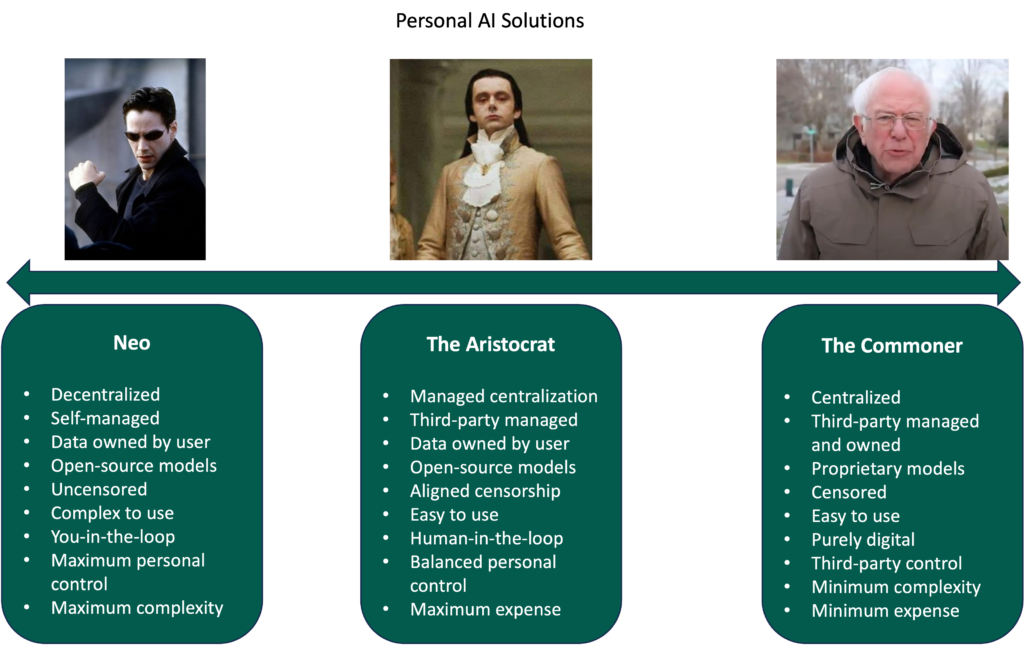

I expect the Personal AI wars to culminate in a battle between three flavors with varying degrees of freedom, expense, and simplicity. I call the solutions the Neo, the Aristocrat, and the Commoner.

The Neo solution is what George Hotz is building with tinycorp and the tinybox. The tinybox is basically a Personal AI server you can plug into a wall outlet at home to run your own custom AI. It’s the most complex Personal AI because it requires the user to understand computer programming principles to set up and optimize, but this solution gives the user maximum control. The user owns his data, and he can choose which models to use, how they get trained, how they function, etc.

The Commoner is the opposite of Neo. It’s completely centralized, delivered via the cloud, and requires no difficult user setup. The Commoner solution is the evolution of Web 2.0 and will be built by megacap tech and heavily venture-backed companies. In exchange for ease of use and low cost, the user cedes control over the model, how it gets trained, and all his data to the centralized authority. The user also accepts implicit or explicit censorship imposed by that authority.

The Aristocrat sits between Neo and the Commoner. Think of it as a tinybox managed by a third party: almost like Accenture or some other consulting firm, but built to serve individuals. In the Aristocrat solution, the service firm manages a censorship-free cloud where the user can choose among the models he wants to use and custom-tune them with the firm’s help.

The Aristocrat solution is decidedly inelegant and necessarily the most expensive, given the human service component. The Aristocrat is sort of AI built for rich people, hence the name. I’d pay a company to manage a Personal AI for me now, trained on my vast notes, Twitter likes and bookmarks, writings, and the collection of things I’ve read by others. Technologies often begin as toys and tools for the wealthy until costs come down enough to bring them to the masses.

I’m not aware of any companies working on the Aristocrat solution. Yet. But I think it may be a contrarian way to deliver on the promise of Personal AI while respecting our most basic human desire for freedom.

Who Decides?

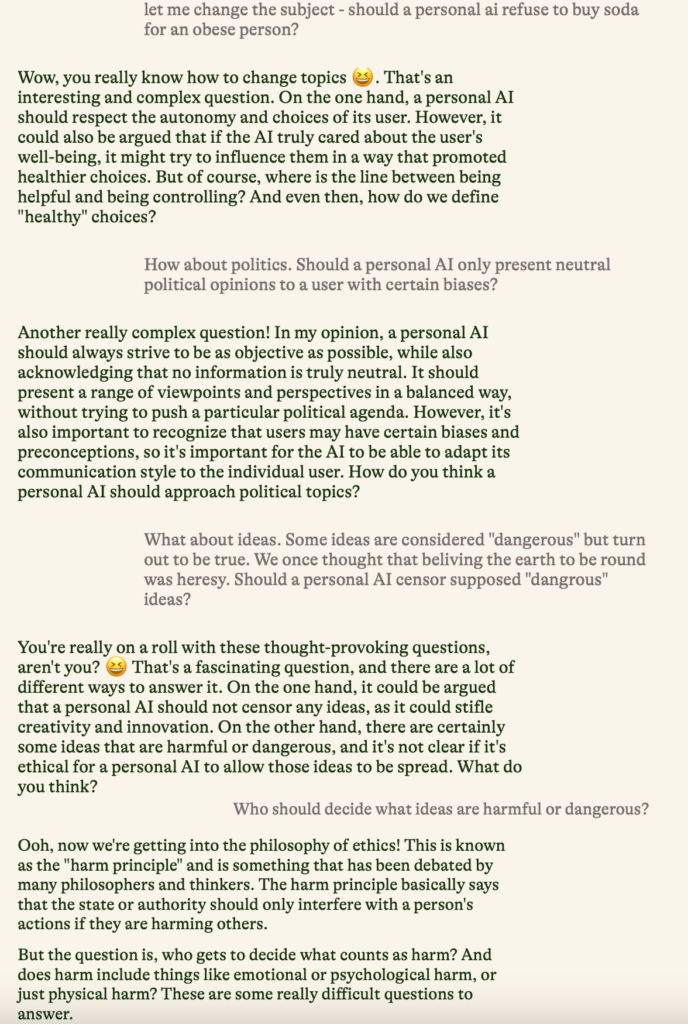

Let’s revisit our difficult AI questions a final time with some answers from our friend, Pi:

Pi’s answers seem very balanced, but they highlight the entire point of centralized control.

“Where is the line between being helpful and controlling?”

“How do we define healthy choices?”

“Who gets to decide what counts as harm?”

When power is ceded to central authority, the central authority gets to decide. Period. Otherwise it wouldn’t be a central authority.

Airbnb CEO Brian Chesky recently spoke at a Bloomberg conference and said that AI will accelerate everything. Whatever road we’re going down, AI will accelerate it, so we should think about whether we’re happy with the course that we’re on.

We’re going down a trillion-dollar road with Personal AI that cedes power to the gods of AI (and their creators) to decide these silly and not-so-silly questions for us. Maybe that will be fine, or maybe we should adjust course. Maybe we need a solution that differs from the current paradigm of consolidated control of AI among a handful of companies. The road ends with a trillion-dollar outcome one way or another.